Large text-to-image diffusion models have achieved remarkable success in generating diverse, high-quality images.

Additionally, these models have been successfully leveraged to edit input images by just changing the text prompt.

But when these models are applied to videos, the main challenge is to ensure temporal consistency and coherence across frames.

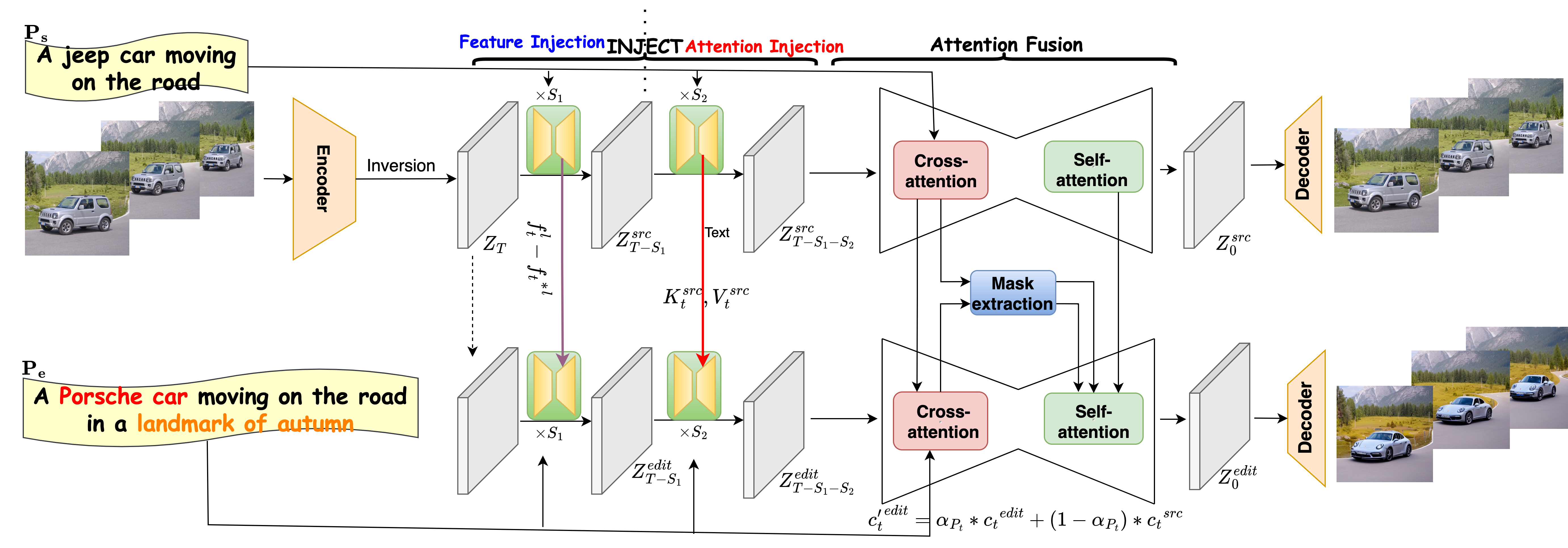

In this paper, we propose InFusion, a framework for zero-shot text-based video editing that leverages large pre-trained image diffusion models. Our framework supports editing of multiple concepts, with pixel-level control over the diverse concepts mentioned in the editing prompt. Specifically, we inject the difference between the features obtained with the source and edit prompts from the U-Net residual blocks of the decoder layers. When these are combined with injected attention features, it becomes feasible to query the source contents and scale the edited concepts while injecting the unedited parts. The editing is further controlled in a fine-grained manner with mask extraction and attention fusion, which cut the edited part from the source and paste it into the denoising pipeline for the editing prompt. Our framework is a low-cost alternative to one-shot tuned models for editing, since it does not require training. We demonstrate complex concept editing with a generalised image model (Stable Diffusion v1.5) using LoRA. The adaptation is compatible with all existing image diffusion techniques. Extensive experimental results demonstrate the effectiveness of our framework over existing methods in rendering high-quality and temporally consistent videos.
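
To make the two editing ideas above concrete, the following is a minimal NumPy sketch, not the framework's actual implementation. It assumes that the feature difference is added back onto the source features with a scalar weight, and that the mask is obtained by thresholding a normalised attention map; the function names, the `scale` and `threshold` parameters, and the toy 4×4 arrays are all hypothetical and chosen only for illustration.

```python
import numpy as np


def inject_feature_difference(f_source, f_edit, scale=1.0):
    """Add the scaled (edit - source) feature difference to the source features.

    Assumption: a single scalar `scale` controls the strength of the edit.
    """
    return f_source + scale * (f_edit - f_source)


def extract_mask(attn_map, threshold=0.5):
    """Binarise an attention map after min-max normalisation (assumed scheme)."""
    lo, hi = attn_map.min(), attn_map.max()
    norm = (attn_map - lo) / (hi - lo + 1e-8)
    return (norm > threshold).astype(attn_map.dtype)


def fuse(source, edit, mask):
    """Paste the edited region (mask == 1) over the source (mask == 0)."""
    return mask * edit + (1.0 - mask) * source


# Toy stand-ins for one spatial feature map of a single frame.
f_source = np.zeros((4, 4))
f_edit = np.ones((4, 4))

# Toy attention map that highlights a 2x2 region for the edited concept.
attn = np.zeros((4, 4))
attn[1:3, 1:3] = 1.0

mask = extract_mask(attn)
injected = inject_feature_difference(f_source, f_edit, scale=2.0)
fused = fuse(f_source, injected, mask)
```

In this toy setting, only the 2×2 region selected by the mask receives the scaled edit features, while every other location keeps the source features unchanged, which mirrors the cut-and-paste behaviour described in the abstract.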